Limiting the impact on production performance, improving capacity utilization, and enabling high-density hard disk drives are just a few examples of the ROI of Fast RAID Rebuilds. RAID rebuild time is the time it takes for a storage system to return itself to a protected state after a drive failure. The time window is critical because if another drive fails during this time, there may be complete data loss. Also, the rebuilding effort on most storage systems will significantly impact production performance. Slow rebuilds require IT to take extra measures, which cost money, to limit the organization’s exposure.

The Anatomy of Fast RAID Rebuilds

To improve RAID rebuilds, most vendors focus on the media, either forcing customers to use flash drives or capping the density of the hard drives their systems use. The problem is not the media. With the correct algorithm, the media plays only a minor role in the rebuilding effort.

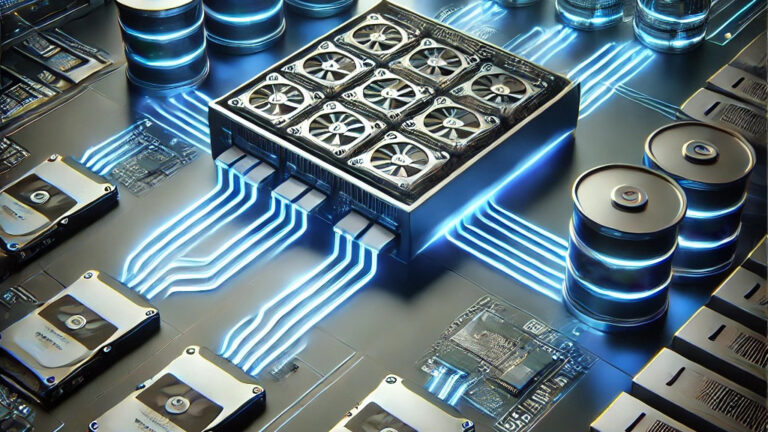

Fast RAID rebuilds require three fundamental elements. First, the storage solution needs to be able to read data from the surviving media as fast as the media can deliver it. Second, the storage solution needs to execute a complex mathematical operation that uses parity to determine what data was on the failed drive. In straightforward terms, it is solving for “x.” Finally, the storage solution needs to write the recalculated data to the surviving drives as fast as possible.
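To make "solving for x" concrete, here is a minimal sketch that assumes the simplest form of parity, a single XOR parity block per stripe. Production systems, especially those with dual or triple parity, use more involved math. The block contents and the `xor_blocks` helper are illustrative only.

```python
from functools import reduce


def xor_blocks(blocks):
    """XOR equal-length byte blocks together, one byte position at a time."""
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*blocks))


# Three data blocks, as they might sit on three drives in one stripe.
data = [b"AAAA", b"BBBB", b"CCCC"]

# The parity block is the XOR of all data blocks (stored on a fourth drive).
parity = xor_blocks(data)

# Simulate losing the second drive, then solve for the missing block ("x")
# by XORing everything that survived: the remaining data plus the parity.
survivors = [data[0], data[2], parity]
rebuilt = xor_blocks(survivors)

print(rebuilt == data[1])
```

The three elements from the paragraph above all appear here in miniature: reading the survivors, computing the missing block, and producing data that then has to be written somewhere.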

Most legacy storage solutions, be they all-flash, hybrid, or hard disk only, don’t own the IO path. They leverage open source or public domain file systems and code to get to market quickly, so they could not improve IO performance to a drive even if they wanted to. As a result, most “improvements” in RAID rebuild times occur because the vendor is using faster media, and all-flash systems are mistakenly seen as a fix for long RAID rebuild times. The vendor didn’t change its legacy code (because it can’t); it just leveraged a faster technology.

Designing Fast RAID Rebuild Algorithms

The first step in fast rebuilds is to make sure a drive failure protection algorithm is in place which enables the system to read and write data from all the surviving drives. Most modern RAID algorithms do read data from all available drives, but the overwhelming majority do not write data to all surviving drives. In most cases, these systems have to set aside (reserve) drives, called hot spares. During a rebuilding process, they will activate one of these hot spares, and all the recalculated data is written to that single drive, which will bottleneck the recovery process.
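The hot spare bottleneck can be illustrated with a rough write-side model. This is a sketch under stated assumptions, not a benchmark. It assumes a sustained 200 MB/s write rate per hard disk drive and a 12-drive group with one failure. It also ignores read time, parity computation, and production load. The function name and parameters are hypothetical.

```python
def rebuild_write_hours(data_tb, write_targets, per_drive_write_mbps=200.0):
    """Hours needed to write `data_tb` of rebuilt data, spread evenly
    across `write_targets` drives (write side only; assumed throughput)."""
    if write_targets < 1:
        raise ValueError("need at least one drive to write to")
    total_mb = data_tb * 1_000_000  # decimal terabytes to megabytes
    seconds = total_mb / (per_drive_write_mbps * write_targets)
    return seconds / 3600


# All recalculated data lands on a single hot spare.
hot_spare = rebuild_write_hours(20, write_targets=1)

# The same data is spread across the 11 surviving drives of a 12-drive group.
distributed = rebuild_write_hours(20, write_targets=11)

print(f"hot spare:   {hot_spare:.1f} hours")    # 27.8 hours
print(f"distributed: {distributed:.1f} hours")  # 2.5 hours
```

In this model the write time shrinks in direct proportion to the number of drives that share the write load, which is why a single hot spare caps rebuild speed regardless of how fast the reads are.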

The data protection algorithm also needs to be intelligent in determining what data is missing from the drive. Most algorithms are only granular to the drive level, not the data level, which means a 20TB hard disk drive with 1TB of data on it is as slow to recover as a 20TB drive with 19TB of data on it.
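The difference between drive-level and data-level granularity can be sketched with a simple allocation map. The map, the stripe size, and the `stripes_to_rebuild` function are hypothetical stand-ins for whatever metadata a real system keeps about which regions of a drive hold data.

```python
def stripes_to_rebuild(allocation_map, data_aware):
    """Return the indexes of stripes that must be reconstructed.

    allocation_map: one boolean per stripe on the failed drive,
                    True when the stripe holds data.
    data_aware:     False models a drive-level algorithm that rebuilds
                    every stripe; True rebuilds only stripes in use.
    """
    return [i for i, in_use in enumerate(allocation_map) if in_use or not data_aware]


# 20 stripes standing in for a 20TB drive, roughly 1TB per stripe.
nearly_empty = [True] + [False] * 19   # about 1TB of data
nearly_full = [True] * 19 + [False]    # about 19TB of data

for label, drive in (("1TB used", nearly_empty), ("19TB used", nearly_full)):
    drive_level = len(stripes_to_rebuild(drive, data_aware=False))
    data_level = len(stripes_to_rebuild(drive, data_aware=True))
    print(f"{label}: drive-level rebuilds {drive_level} stripes, "
          f"data-level rebuilds {data_level}")
```

A drive-level algorithm does the same amount of work in both cases, while a data-aware one scales its work with the amount of data actually stored.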

The algorithm not only needs to intelligently use surviving drives and intelligently determine which data needs to be recreated, but it also needs to be efficient so its execution doesn’t impact production performance while the rebuilding process is executing. Ideally, the entire storage software component is efficient, so there is plenty of available CPU power to execute the rebuild algorithm while still having plenty of resources to maintain application performance.

Read and Write Performance Impact Fast RAID Rebuilds

Armed with an algorithm that enables all surviving drives to be read and, more importantly, written to during the rebuilding process, the storage system also needs fast read and write performance to deliver fast RAID rebuilds. Again, the problem is that most legacy vendors don’t own the IO path to the media and can’t do much to improve the read and write performance of their storage systems other than forcing their customers to buy faster and more expensive media. If they continue to innovate, vendors that own the IO path can significantly improve IO performance and enable the software to extract a much higher percentage of the media’s theoretical maximum.

IT must look for vendors that own the IO path and the drive failure protection algorithm. Ownership of both means the vendor can coordinate the two to deliver the fastest possible rebuilds. The result should be hard disk drive recovery times of a few hours and flash recovery times of a few minutes while delivering high performance to the organization’s applications and users.

Fast RAID Rebuilds Save Money

A storage software solution that addresses all three elements of drive recovery can enable customers to lower costs and accelerate the return on investment of the storage system. A sub-three-hour recovery time from a hard disk failure means customers can use the newest high-density hard disk drives without fear. A 20TB hard disk drive is more than 10X less expensive than its 16TB flash equivalent.

Slow recovery times also lead most customers to increase their drive redundancy settings. The concern is that if a second drive fails while the rebuild process is executing, it can result in data loss and significant downtime. A multi-day recovery effort increases the chance of data loss. Dual parity RAID is commonplace today, and triple parity RAID (which tolerates three simultaneous drive failures) is gaining in popularity. The problem with increasing drive redundancy is that it lowers utilization levels and, in most cases, with legacy RAID algorithms, negatively impacts performance.

A modern storage solution should provide the flexibility to select as many drives for redundancy as IT requires without impacting performance. However, fast recovery times enable organizations to allocate fewer drives to redundancy. There is significantly less chance of multiple drives failing during a three-hour recovery than during a multi-day recovery. These solutions should also execute the rebuilding process without impacting production performance. A modern algorithm enables IT to tailor drive failure protection on an application basis to balance protection and utilization properly.
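The effect of the rebuild window on exposure can be estimated with a simple reliability model. This sketch assumes independent drive failures at a constant rate, a 2% annualized failure rate, and 11 surviving drives. All three are illustrative assumptions, and real failures are often correlated, so treat the output as a relative comparison rather than a prediction.

```python
import math

HOURS_PER_YEAR = 8760


def p_second_failure(surviving_drives, rebuild_hours, afr=0.02):
    """Probability that at least one surviving drive fails during the
    rebuild window, assuming independent failures at a constant rate.

    afr is an assumed annualized failure rate per drive (2% here).
    """
    # Convert the annual failure probability to a constant hourly rate.
    rate_per_hour = -math.log(1.0 - afr) / HOURS_PER_YEAR
    return 1.0 - math.exp(-surviving_drives * rate_per_hour * rebuild_hours)


fast = p_second_failure(surviving_drives=11, rebuild_hours=3)
slow = p_second_failure(surviving_drives=11, rebuild_hours=72)

print(f"3-hour rebuild:  {fast:.6f}")
print(f"72-hour rebuild: {slow:.6f}")
print(f"exposure ratio:  {slow / fast:.1f}x")
```

Under these assumptions the exposure grows almost linearly with the length of the rebuild window, so a three-day rebuild carries roughly 24 times the risk of a three-hour one.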

The modern storage algorithm manages data, not drives, and is abstracted from the underlying hardware. It provides the additional benefit of flexible expansion, which eliminates storage refreshes and data migration. As both flash and hard disk densities continue to increase, the modern storage algorithm can integrate new, higher density drives and use them to their full capacity without requiring new volumes that force IT to migrate data from old to new.

Learn More

StorONE spent its first eight years rewriting the foundation of storage IO and building its storage engine, optimizing IO for maximum efficiency. With StorONE, you can extract more performance and achieve greater scale in a much smaller, more cost-effective footprint.

